Supplementary Job SJ1.0

Revision as of 10:02, 10 August 2015

This page documents the vn8.4 GA4.0 N216L85 supplementary job SJ1.0 (xjgvb/c on MONSooN and xjgve/? on ARCHER).

This job has been developed by Luke Abraham with help from several others.

It is a continuation of the work described on the GA4.0 N216 Development page, where more information can be found.

Base Model

The base atmosphere model used here is the GA4.0 configuration. More information on GA4.0 development can be found on the Global Atmosphere 4.0/Global Land 4.0 documentation pages (password required). A GMD paper documenting this model is also available.

The configuration is based on the Met Office job anenj (via MONSooN job xhmaj), which is derived from amche (the standard GA4.0 N96L85 interactive dust model) via:

vn8.4 N216 development

- amche (base vn8.4 GA4.0 N96L85 job) owned by Dan Copsey
  → xipvp (MONSooN) owned by Jeremy Walton
  → xjgva (made N216 by comparing aliur to ajthw) owned by Luke Abraham
  → xjgvb initialised from the 1999-12-01 dump from xjgva, and made a TS2000 set-up (cf. RJ4.0 settings)
  → xjgve copied to ARCHER

reference vn8.0 jobs

- N96
  - aliur (base vn8.0 GA4.0 N96L85 job)
    → xipvo (MONSooN) owned by Jeremy Walton
- N216
  - ajthw (base vn8.0 GA4.0 N216L85 job)
    → xfthi (MONSooN) owned by Oliver Derbyshire
    → xfaym owned by Malcolm Roberts
    → xhcea owned by Jane Mulcahy

Problems with initial dump

The original xjgva/b runs on MONSooN had problems in their initial dump files, in the m01s00i278 (MEAN WATER TABLE DEPTH M) and m01s00i281 (SATURATION FRAC IN DEEP LAYER) fields. Both fields contained NaNs, which had been present for some time in earlier dumps. To remove them, these two fields were extracted, the NaNs were set to a _FillValue or missing_value, and the fields were then made into ancillary files. This appears to have fixed the problem.
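The NaN clean-up step can be sketched as follows. This is a minimal illustration only. It assumes the field has already been read into a 2-D list of floats; reading and writing UM dump and ancillary files is not shown, and the tools actually used for SJ1.0 are not recorded here. The fill value is also an assumption. It is set to the UM real missing-data indicator (-32768.0*32768.0), which may not be the value that was actually used. `replace_nans` and `FILL_VALUE` are hypothetical names.

```python
import math
from typing import List, Tuple

# ASSUMPTION: fill with the UM real missing-data indicator (-32768.0*32768.0).
# The value actually used for SJ1.0 is not recorded on this page.
FILL_VALUE = -32768.0 * 32768.0


def replace_nans(field: List[List[float]], fill: float = FILL_VALUE) -> Tuple[List[List[float]], int]:
    """Return a copy of a 2-D field with every NaN replaced by `fill`,
    together with the number of points that were replaced."""
    replaced = 0
    cleaned: List[List[float]] = []
    for row in field:
        new_row = []
        for value in row:
            if math.isnan(value):
                new_row.append(fill)
                replaced += 1
            else:
                new_row.append(value)
        cleaned.append(new_row)
    return cleaned, replaced


if __name__ == "__main__":
    # Toy 2x3 stand-in for m01s00i278 (mean water table depth, m).
    water_table = [[1.5, float("nan"), 2.0], [float("nan"), 0.8, 3.1]]
    cleaned, n = replace_nans(water_table)
    print(n)                            # 2
    print(cleaned[0][1] == FILL_VALUE)  # True
```

Counting the replaced points makes it easy to confirm that a field really contained NaNs before it is written out as an ancillary file.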

More information can be found on NCAS-CMS ticket #1622.

Scaling (ARCHER)

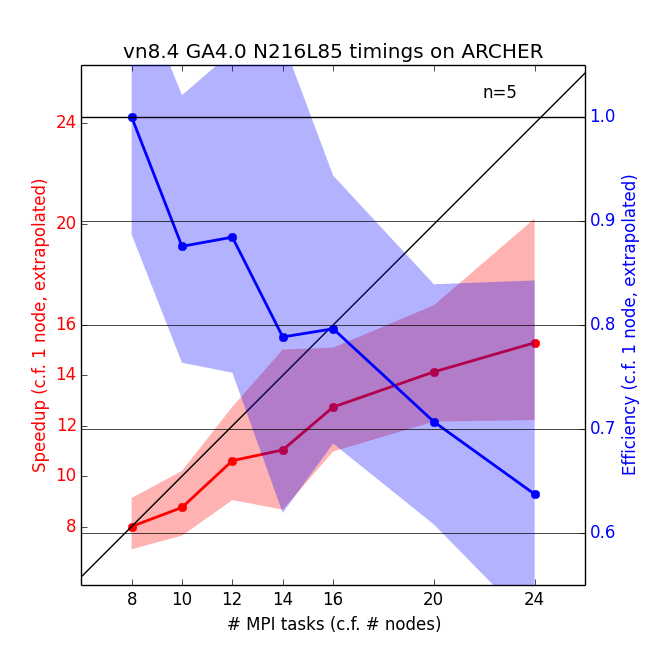

Each compute node contains two 2.7 GHz, 12-core Ivy Bridge processors (24 cores in total, each able to support 2 hardware threads) and 64 GB of RAM. Due to memory restrictions, the N216L85 configuration cannot run on fewer than 8 nodes (so the 8-node, 20-minute short queue can be used for debugging). All simulations used 2 OpenMP threads with 12 MPI tasks per node (i.e. half as many MPI tasks per node as there are cores).

Scaling tests were performed on 8 to 24 ARCHER nodes using a series of 2-day runs; the results are shown in the figure above.

From these tests, it is recommended to use 12 nodes, in a 12EW x 12NS domain decomposition.
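The node counts used in this section follow from simple arithmetic. With 12 MPI tasks per node, an EW x NS decomposition needs EW*NS/12 nodes. In addition, 12 tasks x 2 OpenMP threads occupies all 24 cores of a node. The sketch below checks this arithmetic. The helper `nodes_for_decomposition` and the constants are hypothetical and are based on the figures quoted on this page. They are not part of any UM or ARCHER tool.

```python
# Figures taken from the ARCHER set-up described above (illustrative constants).
CORES_PER_NODE = 24   # two 12-core Ivy Bridge processors per node
TASKS_PER_NODE = 12   # MPI tasks per node used in the scaling tests
OMP_THREADS = 2       # OpenMP threads per MPI task
MIN_NODES = 8         # N216L85 does not fit in memory on fewer nodes


def nodes_for_decomposition(nproc_ew: int, nproc_ns: int) -> int:
    """Return the number of nodes needed for an EW x NS decomposition.

    Hypothetical helper: raises ValueError if the MPI tasks do not fill
    whole nodes, or if the job would need fewer than MIN_NODES nodes.
    """
    total_tasks = nproc_ew * nproc_ns
    nodes, leftover = divmod(total_tasks, TASKS_PER_NODE)
    if leftover:
        raise ValueError(f"{total_tasks} MPI tasks do not fill whole nodes")
    if nodes < MIN_NODES:
        raise ValueError(f"{nodes} nodes is below the {MIN_NODES}-node memory limit")
    return nodes


if __name__ == "__main__":
    # 12 tasks x 2 threads uses every core on a node.
    assert TASKS_PER_NODE * OMP_THREADS == CORES_PER_NODE
    for ew, ns in [(12, 12), (12, 8), (16, 12)]:
        print(f"{ew}EW x {ns}NS -> {nodes_for_decomposition(ew, ns)} nodes")
```

For the decompositions mentioned on this page, the sketch gives 12 nodes for 12EW x 12NS, 8 nodes for 12EW x 8NS and 16 nodes for 16EW x 12NS.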

cumf tests (ARCHER)

It is useful to perform cumf tests to ensure that the model behaves as expected. The utility can be found at /work/n02/n02/hum/vn8.4/cce/utils/cumf.

Climate meaning was still switched on for these tests, so the temporary partial sums were still present in the dumps. These are expected to differ between some of the tests.

NRUN-NRUN tests (ARCHER)

For this test the model is run twice, and the two 2-day dumps are compared. It makes no difference whether daily or 2-day dumping is used, provided that both runs use the same dumping frequency. In this case, the dumps compare.

    COMPARE - SUMMARY MODE
    -----------------------
    Number of fields in file 1 = 10884
    Number of fields in file 2 = 10884
    Number of fields compared = 10884
    FIXED LENGTH HEADER: Number of differences = 3
    INTEGER HEADER: Number of differences = 0
    REAL HEADER: Number of differences = 0
    LEVEL DEPENDENT CONSTANTS: Number of differences = 0
    LOOKUP: Number of differences = 0
    DATA FIELDS: Number of fields with differences = 0
    files compare, ignoring Fixed Length Header

This means that there is NOT a fundamental problem with the model (e.g. use of uninitialised memory): the model gives the same results when run a second time with everything else unchanged.
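When many cumf comparisons are run, it can help to check the summary output automatically. The sketch below parses summary-mode text in the format quoted on this page. It then applies the criterion that cumf itself reports for the NRUN-NRUN case: the files compare if nothing differs apart from the fixed-length header. The functions `parse_cumf_summary` and `dumps_bit_compare` are hypothetical helpers and are not part of the UM utilities. The regular expressions assume the exact wording shown on this page, which may differ in other UM versions.

```python
import re
from typing import Dict

# Header sections reported by cumf in summary mode (wording as quoted on this page).
HEADER_SECTIONS = (
    "FIXED LENGTH HEADER",
    "INTEGER HEADER",
    "REAL HEADER",
    "LEVEL DEPENDENT CONSTANTS",
    "LOOKUP",
)

OTHER_PATTERNS = {
    "DATA FIELDS": r"DATA FIELDS:\s*Number of fields with differences\s*=\s*(\d+)",
    "FIELDS IN FILE 1": r"Number of fields in file 1\s*=\s*(\d+)",
    "FIELDS IN FILE 2": r"Number of fields in file 2\s*=\s*(\d+)",
}


def parse_cumf_summary(text: str) -> Dict[str, int]:
    """Extract field counts and per-section difference counts from cumf summary text."""
    counts: Dict[str, int] = {}
    for section in HEADER_SECTIONS:
        pattern = re.escape(section) + r":\s*Number of differences\s*=\s*(\d+)"
        match = re.search(pattern, text)
        if match:
            counts[section] = int(match.group(1))
    for key, pattern in OTHER_PATTERNS.items():
        match = re.search(pattern, text)
        if match:
            counts[key] = int(match.group(1))
    return counts


def dumps_bit_compare(text: str) -> bool:
    """True if the dumps match everywhere except the fixed-length header."""
    counts = parse_cumf_summary(text)
    if "DATA FIELDS" not in counts:
        raise ValueError("no DATA FIELDS summary line found")
    if counts.get("FIELDS IN FILE 1") != counts.get("FIELDS IN FILE 2"):
        return False
    headers_match = all(counts.get(s, 0) == 0 for s in HEADER_SECTIONS[1:])
    return headers_match and counts["DATA FIELDS"] == 0
```

Applied to the NRUN-NRUN summary above, `dumps_bit_compare` returns True. Applied to the NRUN-CRUN summary in the next section, it returns False, because the real header and 8274 data fields differ.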

NRUN-CRUN tests (ARCHER)

For this test, the 2nd-day dump of a daily-dumping run performed in a single job-step is compared with the 2nd-day dump of a run in which that dump was produced on a CRUN step (i.e. where the 1st-day dump was produced on the NRUN step). In this case, the dumps DO NOT compare.

    COMPARE - SUMMARY MODE
    -----------------------
    Number of fields in file 1 = 10884
    Number of fields in file 2 = 10884
    Number of fields compared = 10884
    FIXED LENGTH HEADER: Number of differences = 3
    INTEGER HEADER: Number of differences = 0
    REAL HEADER: Number of differences = 3
    LEVEL DEPENDENT CONSTANTS: Number of differences = 0
    LOOKUP: Number of differences = 0
    DATA FIELDS: Number of fields with differences = 8274
    Field 1 : Stash Code 2 : U COMPNT OF WIND AFTER TIMESTEP : Number of differences = 140400
    ...

This means that splitting a run into job-steps of different lengths does NOT give reproducible results.

CRUN-CRUN tests (ARCHER)

For this test the model is run a second time as NRUN-CRUN job-steps. The 2-day dump produced by the CRUN step of the earlier NRUN-CRUN test is then compared with the newly generated 2-day dump from this second CRUN. This test bit-compares.

This means that a job-step can be re-run with reproducible results, provided that the dump frequency is the same and that both runs start from the same dump.

CRUN-NRUN tests (ARCHER)

For this test the model is run a second time as an NRUN. It starts from the 1st-day dump produced by a 2-day NRUN (or NRUN-CRUN) run that uses daily dumping. The 2nd-day dump from the earlier NRUN-NRUN (or NRUN-CRUN) test is then compared with the newly generated 2nd-day dump from this second NRUN. This test bit-compares.

This means that a new job can be created and continued as an NRUN from a dump of an existing job, and the results will bit-compare.

Change of dump frequency test (ARCHER)

In this test, a 2-day run is performed with daily dumping (in a single job-step), and then a second 2-day run is performed with 2-day dumping. In this case, the two 2-day dumps DO NOT bit-compare.

    COMPARE - SUMMARY MODE
    -----------------------
    Number of fields in file 1 = 10884
    Number of fields in file 2 = 10989
    Number of fields compared = 10884
    Number of fields from file 2 omitted from comparison = 105
    FIXED LENGTH HEADER: Number of differences = 4
    INTEGER HEADER: Number of differences = 0
    REAL HEADER: Number of differences = 3
    LEVEL DEPENDENT CONSTANTS: Number of differences = 0
    LOOKUP: Number of differences = 17454
    DATA FIELDS: Number of fields with differences = 8438
    Field 1 : Stash Code 2 : U COMPNT OF WIND AFTER TIMESTEP : Number of differences = 140400
    ...

This means that on ARCHER, if the dump frequency is changed at any point during a run, the run will NOT bit-compare with a run in which it was not changed. You should therefore try to keep the 10-day dumping frequency throughout a run.

Change of domain decomposition test (ARCHER)

In this test, a 2-day run is performed with daily dumping (in a single job-step), and then an equivalent run is performed with a different domain decomposition. For this test, a 16EW x 12NS decomposition was compared with a 12EW x 8NS decomposition. In this case, the dumps DO NOT bit-compare.

    COMPARE - SUMMARY MODE
    -----------------------
    Number of fields in file 1 = 10884
    Number of fields in file 2 = 10884
    Number of fields compared = 10884
    FIXED LENGTH HEADER: Number of differences = 4
    INTEGER HEADER: Number of differences = 0
    REAL HEADER: Number of differences = 3
    LEVEL DEPENDENT CONSTANTS: Number of differences = 0
    LOOKUP: Number of differences = 0
    DATA FIELDS: Number of fields with differences = 8409
    Field 1 : Stash Code 2 : U COMPNT OF WIND AFTER TIMESTEP : Number of differences = 140400
    ...

This means that on ARCHER, if you need to change the domain decomposition for any reason, the jobs will not bit-compare.
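This page does not diagnose why changing the decomposition changes the answers. One common cause in parallel models, offered here only as a plausible explanation, is that floating-point addition is not associative. If a global sum is formed from per-task partial sums, the result can therefore depend on how the domain is split. The toy sketch below is pure Python and unrelated to the UM source; `naive_sum` and `decomposed_sum` are hypothetical names. It shows a four-value sum that gives different answers when it is split into one chunk and into two chunks.

```python
from typing import List


def naive_sum(values: List[float]) -> float:
    """Add values strictly left to right (no compensated summation)."""
    total = 0.0
    for value in values:
        total += value
    return total


def decomposed_sum(values: List[float], n_chunks: int) -> float:
    """Sum each contiguous chunk separately (one per hypothetical MPI task),
    then add the partial sums, mimicking a decomposed global sum."""
    size = -(-len(values) // n_chunks)  # ceiling division
    partials = [naive_sum(values[i:i + size]) for i in range(0, len(values), size)]
    return naive_sum(partials)


if __name__ == "__main__":
    values = [1e16, 1.0, -1e16, 1.0]
    print(decomposed_sum(values, 1))  # 1.0
    print(decomposed_sum(values, 2))  # 0.0
```

The sketch uses an explicit loop instead of Python's built-in `sum`, because recent Python versions use compensated summation for floats, and that would hide the effect.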

Contributions and Acknowledgements

Luke Abraham would like to thank the following people (in no particular order) for their help in creating this job:

- Jeremy Walton (Met Office)

- Malcolm Roberts (Met Office)

- Jane Mulcahy (Met Office)

- Maria Russo (NCAS, University of Cambridge)

- Marie-Estelle Demory (NCAS, University of Reading)

- NCAS-CMS